Compiled by Nancy Brannon

If you are a Facebook user, you can connect with family and friends, wish one another Happy Birthday, spread the word about rescued horses and dogs, watch horse performance videos, and much more. You are also likely to encounter information of dubious accuracy, photos of the food people are eating, and photos of dilapidated farm equipment, among other things. Exposés have been written about how Facebook and other social media platforms use and sell individual user information for profit. The latest investigation of Facebook reveals how the platform has enabled the proliferation of misinformation and hate speech.

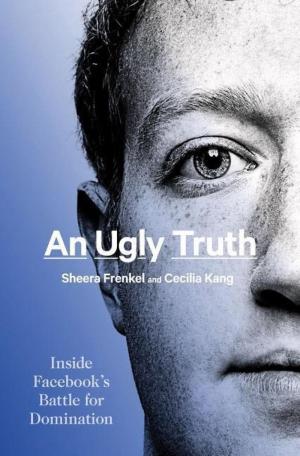

New York Times journalists Sheera Frenkel and Cecilia Kang investigate the “downside of the social media platform” in their new book, An Ugly Truth: Inside Facebook’s Battle for Domination. Frenkel is based in San Francisco and covers cybersecurity for the Times; Kang is based in Washington, D.C., and covers technology and regulatory policy. Their book focuses on the period between the 2016 presidential campaign and the January 6 insurrection at the U.S. Capitol. They were interviewed on NPR’s Fresh Air on July 13, 2021.

Frenkel said that “the scale of Facebook is nearly 3 billion users around the world. The amount of content that courses through the platform every day is just so enormous.” Yet users give little or no thought to what’s happening behind the scenes. And each time we log back on, Facebook just makes more money.

Misinformation

Frenkel tells Fresh Air host Terry Gross about how the social media platforms handle misinformation: “These social media companies are all struggling with how to handle misinformation and disinformation. Along the lines of misinformation, it is a very current and present danger in that just recently, the chief of staff of the White House, Ron Klain, was saying that when the White House reaches out to Americans and asks why aren’t they getting vaccinated, they hear misinformation about dangers with the vaccine. And he said that the No. 1 place where they find that misinformation is on Facebook.”

Kang added: “Facebook allowed politicians to post ads without being fact checked. And politicians could say things in advertisements that the average Facebook user could not.”

The book reveals how Facebook struggled to handle falsehoods, divisive rhetoric, and posts promoting extremist ideology, and to decide where to draw the line on junk news sites that played on emotion for clicks. The authors say that the company has not dealt well with manipulated videos and photos that present distorted images of people. Facebook was found to be one of the main platforms rioters used to organize the January 6 insurrection at the Capitol. And in 2020, the coronavirus pandemic brought a new wave of misinformation about the disease and the vaccines.

The most compelling part of the book is a company employee’s discovery of Russian-generated disinformation during the 2016 election campaign. The authors reveal how Alex Stamos, Facebook’s head of security in 2016, found the Russian disinformation campaign about the election, saw how it was spreading on the platform and possibly manipulating American voters, and requested a meeting to inform C.O.O. Sheryl Sandberg and C.E.O. Mark Zuckerberg. The authors note that Facebook employees are reluctant to bring their leaders bad news because of the pressure to grow Facebook’s audience and revenue.

Editor’s note:

The difference between misinformation and disinformation: misinformation is false information that is spread, regardless of any intent to mislead. Disinformation is false information spread knowingly, with the intent to mislead. The term is also used more broadly to mean deliberately misleading or biased information, manipulated narratives or facts, or propaganda.

Hate Speech

Frenkel explained the difficulty of controlling hate speech: “Even though Facebook has put in A.I., artificial intelligence, as well as hired thousands of content moderators to try to detect this [hate speech], they’re really far behind.”

Kang added, “A lot of this hate speech is happening in private groups. This is something Facebook launched just a few years ago, this push towards privacy, towards private groups.… It’s a matter of Facebook creating these kind of secluded, private, walled groups where hate speech can happen and it’s not being found” by Facebook’s algorithms.

In her review of the book for the New York Times, Sarah Frier explains that “the book’s title alludes to an internal posting written by one of Facebook’s longest-tenured executives, Andrew Bosworth, which he called ‘The Ugly.’” In the 2016 memo, Bosworth explained that Facebook cares more about adding users than anything else. “The ugly truth is that we believe in connecting people so deeply that anything that allows us to connect more people more often is [considered] de facto good,” he wrote. “That can be bad if they make it negative. Maybe it costs a life by exposing someone to bullies. Maybe someone dies in a terrorist attack coordinated on our tools. And still we connect people.”

Sarah Frier is also the author of “No Filter: The Inside Story of Instagram.”

Listen to the full Fresh Air interview at: https://www.npr.org/2021/07/13/1015483097/an-ugly-truth-how-facebook-enables-hate-and-disinformation

Read Sarah Frier’s review of this book for the New York Times at: https://www.nytimes.com/2021/07/09/books/review/the-ugly-truth-sheera-frenkel-and-cecilia-kang.html

Read Susan Benkelman’s review of the book for The Washington Post at: https://www.washingtonpost.com/outlook/facebooks-strategy-avert-disaster-apologize-and-keep-growing/2021/07/09/27602114-df53-11eb-ae31-6b7c5c34f0d6_story.html